Restoring from LVM and VMware disks

I recently had to restore a server that failed to boot after a power cut. This machine was a Linux VMware host, and it had three Linux guest virtual machines running at the time. While we had full backups available, I decided to set myself the challenge of recovering the complete disk images, to save the pain of a full rebuild.

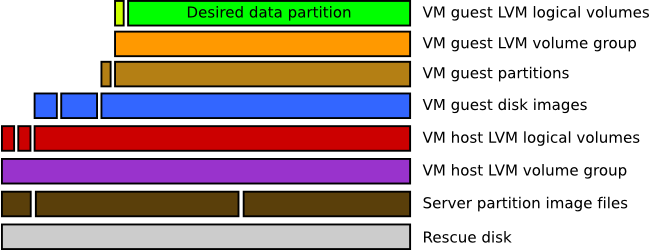

The host server partitions were LVM-formatted volumes on top of hardware RAID-1, and each of the guest virtual machines was partitioned with LVM internally too. This means that the restore process is far from trivial. With just a complete image of the host system, I would need to restore (deep breath) files on a partition on an LVM logical volume (inside a volume group, on a physical volume) in a VMware hard disk stored on LVM (logical volume inside volume group of physical volumes) inside a disk image that is itself a file on a disk. How very convoluted.

I used a Gentoo system to restore the data, although any Linux system with the appropriate packages should be able to do it. No searches turned up information on doing all of this, and I had to come up with some of it myself, so I thought I would document the process.

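Just to illustrate the complexity of the data layout, from the innermost layer outwards:

- the files to restore, in a file system

- on an LVM logical volume (in a volume group, on a physical volume) belonging to the guest

- on a partition of a VMware virtual hard disk

- which is a file on an LVM logical volume (in a volume group, across physical volumes) belonging to the host

- whose physical volumes are now partition images, i.e. ordinary files on another disk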

Requirements

The machine that is performing the restore requires:

- Linux

- LVM2

- VMware Server

- losetup

- NBD (Network Block Device) kernel driver

- file system drivers (if applicable)

Procedure

Boot the dead server (vm-host) using a Linux LiveCD and copy its partitions to files on another drive. If you don’t know which partitions the LVM was stored across, you can either copy all of them, or use `pvscan` (possibly after running `vgscan`) to work out which belong to the appropriate volume group. This dump may take some time.

dd if=/dev/sda3 of=/mnt/ext-drive/sda3-data

dd if=/dev/sda5 of=/mnt/ext-drive/sda5-data
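Since everything that follows depends on these images being faithful copies, it may be worth checking them before shutting vm-host down. The helper below is a minimal sketch, not part of the original procedure: the function name `image_partition`, the `bs=1M` block size and the checksum comparison are all my own assumptions, and it assumes GNU `dd` and `sha256sum` are available on the LiveCD.

```shell
# image_partition SRC DST
# Copy SRC to DST with dd, then compare SHA-256 checksums of both.
# (Hypothetical helper; block size and verification are additions.)
image_partition() {
    src=$1
    dst=$2
    # bs=1M reads in larger chunks than dd's 512-byte default
    dd if="$src" of="$dst" bs=1M || return 1
    src_sum=$(sha256sum < "$src" | cut -d' ' -f1)
    dst_sum=$(sha256sum < "$dst" | cut -d' ' -f1)
    # Succeed only if the image matches the source
    [ "$src_sum" = "$dst_sum" ]
}
```

For example, `image_partition /dev/sda3 /mnt/ext-drive/sda3-data && echo verified`. Note that verifying reads the source a second time, which you may not want on a disk that is physically failing.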

Attach the drive with the partition images to the rescue machine (rescue-host), edit /etc/lvm/lvm.conf on rescue-host, and modify the filter line to allow LVM to scan loopback and network block devices. You’ll probably want to change this back afterwards, so remember what it was.
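To make "remember what it was" less error-prone, the configuration file can be saved before editing. This is a sketch under assumptions: the function names and the `.orig` suffix are hypothetical, and the example filter in the final comment is only the most permissive setting (accept every device), which may not suit your system.

```shell
# LVM_CONF defaults to the path named in the text; override it to try this on a copy.
LVM_CONF=${LVM_CONF:-/etc/lvm/lvm.conf}

# Save a pristine copy once, so the original filter can be restored afterwards.
backup_lvm_conf() {
    [ -e "$LVM_CONF.orig" ] || cp -p "$LVM_CONF" "$LVM_CONF.orig"
}

# Print the active (uncommented) filter line(s).
show_lvm_filter() {
    grep -E '^[[:space:]]*filter[[:space:]]*=' "$LVM_CONF"
}

# Put the saved copy back when the rescue is finished.
restore_lvm_conf() {
    mv "$LVM_CONF.orig" "$LVM_CONF"
}

# Example of a filter that accepts every block device (an assumption;
# adapt it if your existing filter is more selective):
#   filter = [ "a|.*|" ]
```

Run `backup_lvm_conf` and `show_lvm_filter` before editing, and `restore_lvm_conf` once the rescue is complete.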

Set up the partition images as loop devices:

losetup /dev/loop1 /mnt/ext-drive/sda3-data

losetup /dev/loop2 /mnt/ext-drive/sda5-data

Scan for and activate the volume group:

vgchange -ay

Find the logical volume with the data we’re after (LogVol06 in volume group VolGroup00 in this case) and mount it:

mount /dev/VolGroup00/LogVol06 /mnt/rescue

Copy the VMware hard disk files out of the LVM mount, because the volume group stored inside them will conflict with the one that is already active (both are called VolGroup00 in this case), so the two cannot be activated at the same time:
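A minimal sketch of the copy, assuming each guest keeps its disk files in a directory of its own. The function name `copy_vm_dir` and the paths in the usage line are hypothetical; substitute wherever your virtual machines actually live under /mnt/rescue.

```shell
# copy_vm_dir SRC_DIR DST_DIR
# Copy a whole virtual machine directory, preserving attributes.
# Copying the directory rather than a single file also picks up
# disks that are split across several .vmdk files.
copy_vm_dir() {
    src=$1
    dst=$2
    mkdir -p "$dst" && cp -a "$src"/. "$dst"/
}
```

For example: `copy_vm_dir /mnt/rescue/vmware/guest1 /mnt/ext-drive/guest1`.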

Check the partition table on the virtual hard disk:

This will give you something like:

VMware for Linux - Virtual Hard Disk Mounter

Version: 1.0 build-80004

Copyright 1998 VMware, Inc. All rights reserved. -- VMware Confidential

--------------------------------------------

Nr Start Size Type Id Sytem

-- ---------- ---------- ---- -- ------------------------

1 63 208782 BIOS 83 Linux

2 208845 16563015 BIOS 8E Unknown

The second partition (with type 8E) is the one we wanted in this case, but it’s LVM-formatted. This means we can’t use vmware-mount because an LVM partition cannot be mounted normally.
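With several virtual disks to check, picking out the LVM partitions by eye gets tedious. The filter below is a small sketch that reads a listing in the format shown above and prints the numbers of the partitions whose Id is 8E. The function name `lvm_partitions` is hypothetical, and it assumes the column layout matches the sample output.

```shell
# lvm_partitions: read a partition listing on stdin and print the
# numbers of the partitions whose Id column is 8E (Linux LVM).
lvm_partitions() {
    # Only rows starting with a partition number are considered,
    # which skips the banner, header and separator lines.
    awk '$1 ~ /^[0-9]+$/ && toupper($5) == "8E" { print $1 }'
}
```

Pipe the partition listing for a virtual disk into `lvm_partitions`; for the sample above it prints the second partition's number.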

We need to unmount the file system and deactivate the currently active volume group on the loopback devices (i.e. the LVM from vm-host), then detach the loop devices:

umount /mnt/rescue

# repeat the next line for all volume groups from the loopback devices

vgchange -an VolGroup00

losetup -d /dev/loop1

losetup -d /dev/loop2

Now you need a dedicated terminal to mount the LVM partition from the virtual hard disk, as this process must stay running in order to access the virtual drive:
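A minimal sketch of that step, assuming `vmware-loop` takes the disk file, the partition number and an NBD device, in that order. The wrapper name `attach_vmdk_partition` is hypothetical, and the NBD device name varies between systems (for example /dev/nb0 or /dev/nbd0), so check which nodes exist on rescue-host.

```shell
# attach_vmdk_partition DISK PARTITION NBD_DEVICE
# Expose one partition of a virtual disk as a network block device.
# Runs in the foreground until interrupted, so give it its own terminal.
attach_vmdk_partition() {
    if ! command -v vmware-loop >/dev/null 2>&1; then
        echo "vmware-loop not found in PATH" >&2
        return 127
    fi
    # Load the NBD kernel driver from the requirements list
    modprobe nbd || return 1
    vmware-loop "$1" "$2" "$3"
}
```

For the disk listed earlier this would be something like `attach_vmdk_partition /mnt/ext-drive/guest1/disk.vmdk 2 /dev/nbd0`, where the path is a placeholder and 2 is the partition with type 8E.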

If you have more than one virtual partition as part of the LVM, you’ll need to have one terminal for each, and select partition numbers and NBD device numbers as appropriate.

Now find the volume group again:

vgchange -ay

Find the appropriate logical volume and mount it:

mount /dev/VolGroup00/LogVol06 /mnt/rescue

Do whatever you need to do with the data, then unmount it all:

umount /mnt/rescue

vgchange -an VolGroup00

# either press ctrl-c in the vmware-loop terminal(s), or:

killall -INT vmware-loop

# then finally refresh the volume group list to remove old entries

vgscan

And that’s it! I hope this helps someone other than me…
